Search engine optimization (SEO) is essential for a website's visibility and ranking on search engines. One critical but frequently overlooked component of SEO is the robots.txt file.

This small but powerful file tells web crawlers, or bots, how to explore a website. In this article, we'll break down everything you need to know about what robots.txt is, why it's important, how it works, and how it affects SEO.


What is a Robots.txt File?

A robots.txt file is a plain text file in a website's root directory that contains instructions for search engine bots. It acts as a gatekeeper, telling bots which parts of a website they may and may not crawl. The file is a core part of the Robots Exclusion Protocol, the standard websites use to communicate with web crawlers and other robots.
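Crawlers only look for robots.txt at the root of a host, and each combination of protocol, host (including subdomains), and port has its own file. As a minimal Python sketch, the robots.txt URL that governs any page can be derived from the page URL itself; `robots_url` is a hypothetical helper for illustration, not part of any crawler's API.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that applies to page_url.

    Hypothetical helper: keeps the scheme and host (plus any port) and
    replaces the path, query, and fragment with /robots.txt at the root.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.example.com/blog/post?id=3"))
# https://www.example.com/robots.txt
print(robots_url("https://shop.example.com:8443/cart"))
# https://shop.example.com:8443/robots.txt
```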

What is the Importance of Creating a Robots.txt File?

Understanding why a robots.txt file matters is crucial for website owners. A well-configured robots.txt file helps manage the crawl budget by keeping crawlers away from irrelevant pages, so search engines can prioritize the content that matters for ranking.


Crawl Budget Optimization

Google gives each website a "crawl budget" that limits how many pages it will crawl in a given time; this matters most for large sites with many URLs. By blocking less essential pages in robots.txt, you help search engines spend that budget on your most valuable pages.

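Manage Indexing

Admin sections and duplicate material don't need to appear in search results. A robots.txt file controls which sections of your website crawlers may visit, keeping them focused on the pages you want found. Note that robots.txt controls crawling, not indexing: a blocked URL can still be indexed if other pages link to it, so pages that must stay out of search results need a noindex directive (on a page crawlers are allowed to fetch) or password protection.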

SEO Impact

Optimizing robots.txt can improve a website's SEO. Blocking irrelevant or non-SEO-friendly pages helps search engines focus on relevant material, which can support better SERP rankings.

What Does Robots.txt Do?

A robots.txt file serves several functions that can directly impact your website's performance and search engine visibility.

1. Directs Bots

The primary purpose of a robots.txt file is to give instructions to web crawlers on how they should interact with your website. It specifies which parts of the site the bots are allowed or disallowed to crawl.
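To illustrate how one file can address different bots, here is a sketch using Python's standard `urllib.robotparser` module with hypothetical rules. A crawler follows the group whose `User-agent` line matches its name and falls back to the `*` group only when no specific group matches, so in this example Googlebot may crawl `/admin/` while other bots may not.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Googlebot gets its own group; every other bot
# falls back to the "*" group.
RULES = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /drafts/
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

for agent in ("Googlebot", "ExampleBot"):
    for path in ("/blog/", "/drafts/post", "/admin/"):
        allowed = parser.can_fetch(agent, "https://www.example.com" + path)
        print(agent, path, "allowed" if allowed else "blocked")
```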

2. Prevents Server Overload

A robots.txt file helps reduce your server's load by keeping bots from crawling unnecessary pages. This is especially important for websites with limited server resources.

3. Protects Sensitive Information

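Certain parts of a website, like internal search results, private user data, or unfinished content, should not be crawled. A robots.txt file can ask bots not to crawl these areas. However, robots.txt is only a request that well-behaved bots honor, not an access control, so truly private data still needs authentication.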
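Because robots.txt is served at a public URL, anyone can read it, including the paths it asks crawlers to skip. The hypothetical `disallowed_paths` helper below is a minimal sketch showing how easily those paths can be listed, which is why naming a sensitive path in robots.txt can draw attention to it rather than hide it.

```python
from __future__ import annotations


def disallowed_paths(robots_txt: str) -> list[str]:
    """Collect every non-empty Disallow value from a robots.txt body.

    Hypothetical helper: strips # comments, splits each line on the
    first colon, and compares field names case-insensitively.
    """
    paths = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


example = """\
User-agent: *
Disallow: /admin/
Disallow: /private/  # unfinished pages
Allow: /public/
"""
print(disallowed_paths(example))  # ['/admin/', '/private/']
```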

What is the Use of robots.txt in SEO?

The robots.txt file plays a significant role in SEO by giving website owners control over how bots interact with their site.

1. Blocking Non-SEO-Friendly Pages

Many websites have pages that are not meant to appear in search results, such as internal admin pages or duplicate content. Using robots.txt to block crawling of these pages helps keep crawlers focused on valuable content, improving your website's overall SEO performance.

2. Managing Duplicate Content

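Duplicate content can split ranking signals across several URLs. Using robots.txt to stop crawlers from fetching URLs that only repeat existing content (for example, sorted or filtered versions of a listing page) keeps them focused on the main version. Because a blocked URL can still be indexed if it is linked, a rel="canonical" link is usually the better tool for consolidating duplicates that search engines can reach.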
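Duplicate URLs often differ only by a query parameter, such as a sort order. Google and the IETF robots.txt standard (RFC 9309) support two special characters in rule paths: `*` matches any sequence of characters, and a trailing `$` anchors the end of the URL. Python's built-in `urllib.robotparser` only does plain prefix matching, so this is a minimal standalone sketch of the wildcard matching itself; `rule_matches` and the `?sort=` parameter are hypothetical, and the sketch ignores percent-encoding normalization and the precedence between competing Allow and Disallow rules.

```python
import re


def rule_matches(rule_path: str, url_path: str) -> bool:
    """Return True if a robots.txt rule path matches a URL path (including query).

    '*' matches any run of characters (including none); a trailing '$'
    means the URL must end exactly there. Matching always starts at the
    beginning of the path, as with ordinary prefix rules.
    """
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape each literal piece, then join the pieces with '.*' where '*' was.
    pattern = ".*".join(re.escape(part) for part in rule_path.split("*"))
    matcher = re.fullmatch if anchored else re.match
    return matcher(pattern, url_path) is not None


print(rule_matches("/*?sort=", "/shoes?sort=price"))   # True
print(rule_matches("/*?sort=", "/shoes"))              # False
print(rule_matches("/*.pdf$", "/docs/guide.pdf"))      # True
print(rule_matches("/*.pdf$", "/docs/guide.pdf?v=2"))  # False
```

With this kind of matching, a single rule such as `Disallow: /*?sort=` covers every sorted variant of a listing page.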

3. Improving Crawl Efficiency

Crawlers spend a limited amount of time on each website. By using robots.txt to focus their efforts on your most important pages, you make the crawling process more efficient.
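A compliant crawler typically checks each queued URL against robots.txt before fetching it, which is how disallowed URLs stop consuming crawl time. A minimal sketch with Python's standard `urllib.robotparser`; the rules, the URLs, and the `crawlable` helper are hypothetical. Because rules are prefix matches, `Disallow: /search` also covers `/search?q=boots`.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Hypothetical rules: internal search results and cart pages waste
# crawl time, so they are disallowed for every bot.
RULES = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""


def crawlable(urls: list[str], robots_txt: str, agent: str = "*") -> list[str]:
    """Keep only the URLs a compliant crawler would fetch under robots_txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(agent, url)]


frontier = [
    "https://www.example.com/products/boots",
    "https://www.example.com/search?q=boots",
    "https://www.example.com/cart/checkout",
    "https://www.example.com/blog/care-guide",
]
print(crawlable(frontier, RULES))
# ['https://www.example.com/products/boots', 'https://www.example.com/blog/care-guide']
```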

What is 'Disallow' in robots.txt?

The Disallow directive in robots.txt tells bots not to crawl specific sections of a website. For example, if you don't want search engines to crawl your login page, you can add the following lines to your robots.txt file:

```
User-agent: *
Disallow: /login/
```

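This tells compliant bots not to crawl anything under "/login/". Keep in mind that blocking crawling does not guarantee the page stays out of search results: it can still be listed, usually without a description, if other pages link to it.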
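Disallow rules match URL paths by prefix, so `/login/` covers everything beneath that directory but not `/login` without the trailing slash. A quick way to see this, sketched with Python's standard `urllib.robotparser` (the domain is a placeholder):

```python
from urllib.robotparser import RobotFileParser

# The login rule from the example, as a compliant crawler would parse it.
parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /login/"])

base = "https://www.example.com"
for path in ("/login/", "/login/reset", "/login", "/about"):
    # can_fetch is False when the Disallow path is a prefix of the URL path.
    print(path, parser.can_fetch("*", base + path))
# /login/ False
# /login/reset False
# /login True
# /about True
```

If `/login` itself should also be excluded, `Disallow: /login` (without the slash) would cover it, along with any other path that starts with those characters.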

Impact on SEO

The Disallow directive can significantly affect your SEO strategy by keeping crawlers away from irrelevant or low-quality content. However, use it carefully: disallowing important pages can hurt your site's visibility.

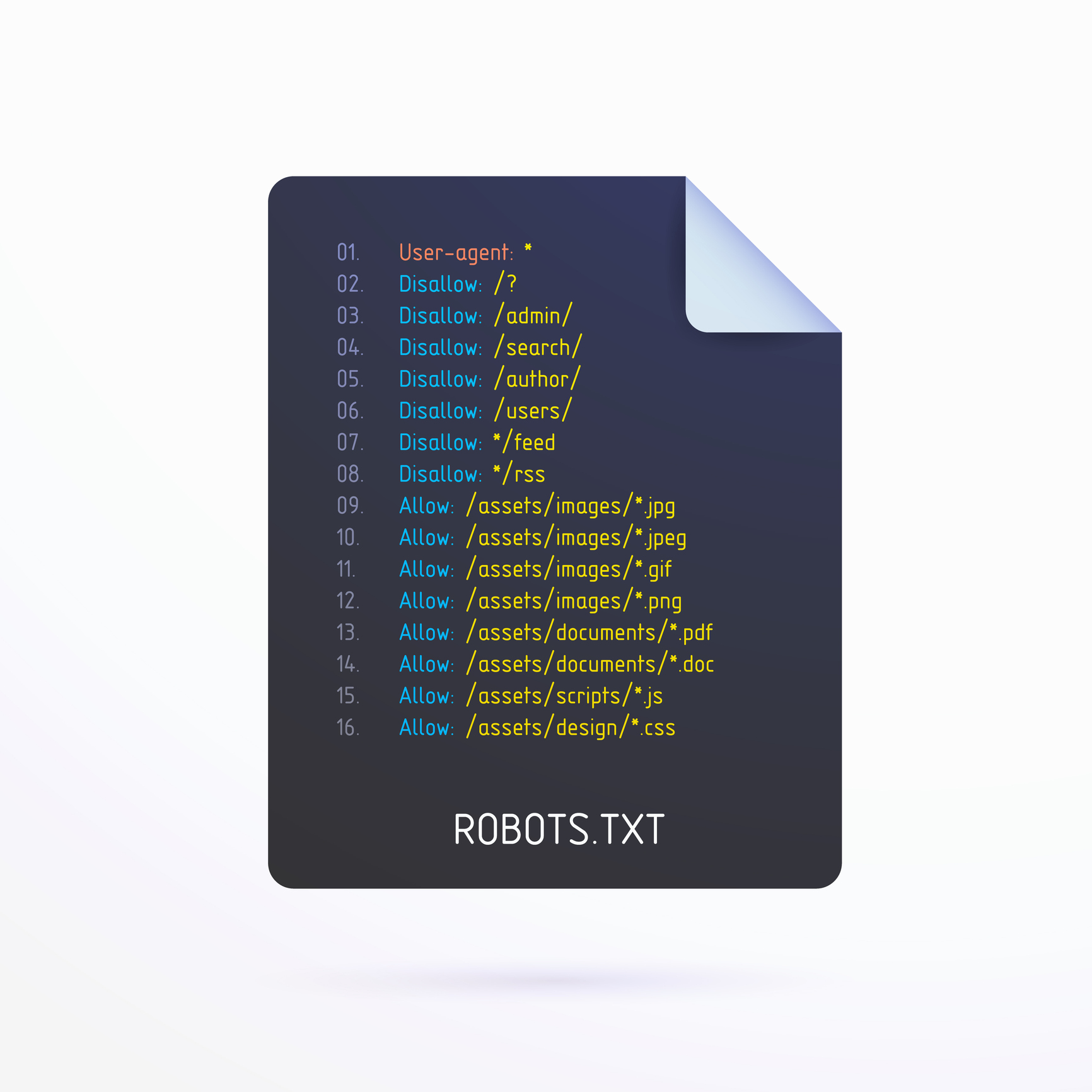

Robots.txt File Example

Here's a simple robots.txt file example:

```
User-agent: *
Disallow: /admin/
Disallow: /private/
Allow: /public/
Sitemap: http://www.example.com/sitemap.xml
```

In this example:

- All bots (denoted by *) are disallowed from accessing the "/admin/" and "/private/" directories.

- Bots are allowed to access the "/public/" directory.

- The file also references the sitemap, which helps search engines find essential pages to crawl.
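One way to confirm that the example file behaves as described is to load it into Python's standard `urllib.robotparser` module. This parser applies the first matching rule in file order, while Google applies the most specific (longest) matching rule; because the rules here don't overlap, both give the same answers. `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

EXAMPLE = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
Allow: /public/
Sitemap: http://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(EXAMPLE.splitlines())

for path in ("/admin/users", "/private/notes", "/public/about", "/"):
    print(path, parser.can_fetch("*", "http://www.example.com" + path))
# /admin/users False
# /private/notes False
# /public/about True
# / True

# The Sitemap line is collected independently of any User-agent group.
print(parser.site_maps())  # ['http://www.example.com/sitemap.xml']
```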

Purpose and Best Practices for Robots.txt

The primary purpose of a robots.txt file is to guide bots to crawl your website efficiently. Here are some best practices for creating an effective robots.txt file:

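1. Place the File in the Root Directory: Name the file robots.txt and place it in your website's root directory (for example, https://www.example.com/robots.txt); crawlers do not look for it in subfolders.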

2. Test the File Regularly: Use Google Search Console or other tools to test your robots.txt file and ensure it works as expected.

3. Don't Block Essential Pages: Avoid blocking pages crucial for SEO, like important product or category pages.

4. Use the Sitemap Directive: Always include a link to your sitemap within the robots.txt file to help crawlers navigate your site efficiently.
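Part of the regular testing described above can be automated. The following is a sketch under stated assumptions: `check_robots` is a hypothetical helper, `must_crawl` is a list of pages you consider essential, and the check uses Python's `urllib.robotparser`, which approximates but does not exactly reproduce Google's own matching.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser


def check_robots(robots_txt: str, must_crawl: list[str],
                 agent: str = "Googlebot") -> list[str]:
    """Return a list of problems; an empty list means the checks passed.

    Hypothetical helper covering two practices: key pages stay
    crawlable, and a Sitemap line is present.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    if not parser.site_maps():  # site_maps() returns None when there are none
        problems.append("no Sitemap directive")
    for url in must_crawl:
        if not parser.can_fetch(agent, url):
            problems.append(f"blocked: {url}")
    return problems


rules = """\
User-agent: *
Disallow: /admin/
Disallow: /category/
"""
important = [
    "https://www.example.com/",
    "https://www.example.com/category/shoes",
]
print(check_robots(rules, important))
# ['no Sitemap directive', 'blocked: https://www.example.com/category/shoes']
```

Running a check like this before each deploy is one way to catch an accidental change to robots.txt.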

Common Mistakes to Avoid with Robots.txt

1. Disallowing All Bots by Mistake: A common error is to accidentally block all bots from crawling your site with the following lines:

```
User-agent: *
Disallow: /
```

This rule tells every compliant bot to avoid your entire site, which can result in your site disappearing from search results.

2. Blocking Important Resources: Blocking CSS or JavaScript files can prevent search engines from rendering your pages properly, which can hurt your rankings.

3. Forgetting to Update the File: As your website evolves, update your robots.txt file to reflect changes in your site's structure and content.
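The first two mistakes can be caught mechanically. A minimal sketch, assuming you supply your home page URL and a few real CSS and JavaScript URLs from your pages; `audit` is a hypothetical helper built on Python's standard `urllib.robotparser`, and the URLs below are placeholders.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser


def audit(robots_txt: str, home_url: str, asset_urls: list[str],
          agent: str = "Googlebot") -> dict:
    """Flag a site-wide block and any blocked CSS/JS files for one user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        # Mistake 1: the home page itself is disallowed (e.g. "Disallow: /").
        "site_blocked": not parser.can_fetch(agent, home_url),
        # Mistake 2: stylesheets or scripts needed for rendering are disallowed.
        "blocked_assets": [u for u in asset_urls if not parser.can_fetch(agent, u)],
    }


home = "https://www.example.com/"
assets = [
    "https://www.example.com/static/site.css",
    "https://www.example.com/static/app.js",
]
print(audit("User-agent: *\nDisallow: /\n", home, assets))
# site_blocked is True and both assets are blocked
print(audit("User-agent: *\nDisallow: /static/\n", home, assets))
# site_blocked is False, but both assets are still blocked
print(audit("User-agent: *\nDisallow: /admin/\n", home, assets))
# site_blocked is False and blocked_assets is empty
```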


Conclusion

The robots.txt file is one of the most important tools in search engine optimization (SEO), letting you manage how search engine bots interact with your website. Well-chosen robots.txt directives can improve your site's crawl efficiency, keep compliant bots out of private areas, and support its overall SEO performance. To make sure your robots.txt file supports your SEO goals, always follow best practices, test the file regularly, and avoid common mistakes.

FAQs

Q1. What is robots.txt?

Ans: A robots.txt file contains directions for search engine bots. It tells them which parts of a website they may or may not crawl.

Q2. Why is it essential to have a robots.txt file?

Ans: The robots.txt file is crucial for managing a website's crawl budget, keeping crawlers away from irrelevant content, and steering compliant bots away from private areas.

Q3. What does robots.txt do?

Ans: A robots.txt file tells bots which pages they can crawl, helping to prevent server overload and keep compliant bots away from private content.

Q4. What is the use of robots.txt in SEO?

Ans: In SEO, robots.txt helps block crawling of non-SEO-friendly pages, manage duplicate content, and improve crawl efficiency.

Q5. What is Disallow in robots.txt?

Ans: The Disallow directive tells bots not to crawl specific pages or directories on a website.